U.S. Department of Transportation

Federal Highway Administration

1200 New Jersey Avenue, SE

Washington, DC 20590

202-366-4000

The FHWA CDIP program manager followed up with the six States that participated during 2010 and 2011.

These States were:

Of the remaining four States, two (Vermont and District of Columbia) have been contacted by the contract team to obtain preliminary follow-up results, and the other two (California and North Carolina) were too recent to be able to comment on project implementations based on the CDIP recommendations. Because of the preliminary nature of the follow-up results obtainable from these four States, their results are not reflected in the following discussion.

Table 1 presents a summary of all results, showing the status of recommendations in each of the seven main sections of the CDIP reports.

Table 1: Status of recommendations in six States.

| Status | Crash Production Process | Timeliness | Accuracy | Completeness | Uniformity | Integration | Accessibility | Total |
|---|---|---|---|---|---|---|---|---|
| Implemented/Partial | 23 | 9 | 4 | 5 | 6 | 3 | 4 | 54 |
| Not Implemented | 13 | 4 | 5 | 5 | 0 | 3 | 7 | 37 |
| Plan to Implement | 21 | 11 | 11 | 10 | 10 | 8 | 6 | 77 |
| Total | 57 | 24 | 20 | 20 | 16 | 14 | 17 | 168 |

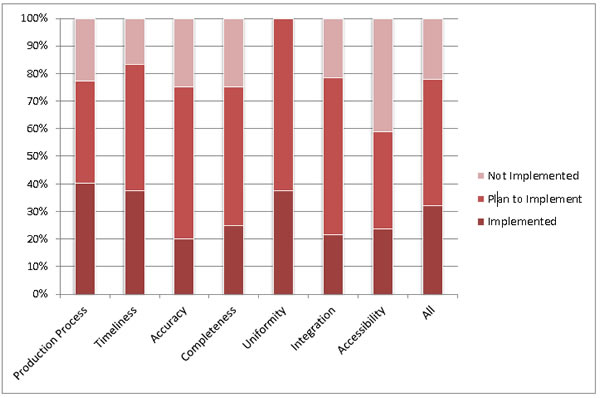

Figure 4 shows the percentages of recommendations for each CDIP section that have been implemented, those that the State plans to implement, and those that the State has decided not to implement.

Figure 4: Status of recommendations.

The figure shows that 78 percent of recommendations have either been implemented (32 percent) or are planned for implementation (46 percent). Among the categories corresponding to the sections of the CDIP reports, recommendations related to the crash production process were the most likely to have been implemented (40 percent), while those related to accuracy were the least likely (20 percent). When States' plans for implementation are included, the uniformity recommendations were the most likely to be implemented eventually (100 percent), while those related to accessibility were the least likely (41 percent were listed by States as "not implemented").
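The percentages quoted above can be reproduced from the counts in the status table. The following is a minimal sketch in Python, not part of the CDIP report: the names `SECTIONS`, `COUNTS`, `percent_by_status`, and `SHARES` are illustrative assumptions, and the counts are transcribed from the table.

```python
# Counts transcribed from the status table; one entry per CDIP report section.
# All names here are illustrative and do not come from the report.
SECTIONS = [
    "Crash Production Process", "Timeliness", "Accuracy", "Completeness",
    "Uniformity", "Integration", "Accessibility",
]
COUNTS = {
    "implemented":     [23, 9, 4, 5, 6, 3, 4],
    "not_implemented": [13, 4, 5, 5, 0, 3, 7],
    "planned":         [21, 11, 11, 10, 10, 8, 6],
}


def percent_by_status(counts, sections):
    """Return {section: {status: percent}}, plus an 'Overall' entry."""
    shares = {}
    for i, section in enumerate(sections):
        # Column total: all recommendations made in this section.
        total = sum(row[i] for row in counts.values())
        shares[section] = {
            status: 100 * row[i] / total for status, row in counts.items()
        }
    # Grand total across every section and status.
    grand_total = sum(sum(row) for row in counts.values())
    shares["Overall"] = {
        status: 100 * sum(row) / grand_total for status, row in counts.items()
    }
    return shares


SHARES = percent_by_status(COUNTS, SECTIONS)

if __name__ == "__main__":
    for name, pct in SHARES.items():
        print(name, {status: round(value) for status, value in pct.items()})
```

Rounding each share to the nearest whole percent gives the figures cited in the text, for example 32 percent implemented and 46 percent planned overall.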

States provided comments for each recommendation explaining their decision to implement, plan to implement, or not implement. These are too numerous to present individually. A review of the States' responses leads to the following general conclusions:

Overall, the results of the CDIP follow-up contacts provide some encouraging news: States are moving forward with existing plans that the CDIP TAT endorsed and are planning to create more formal data quality management programs, including increased data quality measurement. The barriers noted by States relate most often to a lack of resources rather than a lack of interest or desire to improve data quality. The tendency to reject recommendations that relate to outmoded paper-management processes makes sense in the context of resource constraints.

This tendency does have one downside, which the States acknowledge. Without measurement of the current paper-based process (especially of timeliness, accuracy, and completeness), a State will not have a good baseline against which to assess progress as the percentage of reports collected and submitted electronically increases. This is unfortunate because a comparison of paper and electronic reports can help to justify further investment in electronic data collection and submission. Ultimately, as long as a State is making progress in establishing formal data quality management processes, it will be able to quantify the benefits of its data improvement programs. In the long run, it is more important that the States establish and maintain a formal data quality management process than that they establish, in every case, a baseline measurement of the older paper-based processes.

It will also be interesting to see, over the course of three to five years, how many States develop more formal data quality management processes. In the longer term, as statewide crash data systems are replaced or upgraded, the States should find it easier (and less resource-intensive) to measure data quality. In fact, the CDIP TAT often recommended that data quality measurements be built into the next system update rather than undertaken now, when the effort would be largely manual and thus resource-intensive.
