Chapter 1 – Planning for Evaluation: Getting Started

Introduction

Evaluation in Action

California Regularly Tracks SHSP Implementation

California’s comprehensive Strategic Highway Safety Plan (SHSP) includes 17 Challenge Areas and currently 175 Actions. The SHSP Steering Committee meets bimonthly with Challenge Area Leaders, Action Leads, and other SHSP safety stakeholders to discuss implementation issues, present new actions, address challenges, and celebrate success. The State uses an electronic system (OnTrack) to track the implementation and progress of SHSP Actions, which allows Action Leads to update the status of the Actions for which they are responsible and provide updated comments on-line. A unique feature of the OnTrack system is the ability to produce a variety of tailored reports. For example, users can sort data by agency to see a summary of the status of implementation of Actions by Lead Agency. Monthly SHSP status reports are generated from OnTrack and posted, as required, on the SHSP web site. The tracking tool keeps safety stakeholders involved and provides them with a report and agenda to discuss at SHSP Steering Committee meetings and Challenge Area Team meetings. It also establishes a level of accountability and gives safety stakeholders ownership in the SHSP implementation process.

It is never too early to institute good evaluation practices; in fact, planning for evaluation should begin when the SHSP is developed. During the early stages of SHSP development, attention should be given to how progress will be measured and how success will be determined. Some essential elements to have in place prior to conducting the SHSP evaluation include:

- SHSP emphasis areas with performance measures, goals, and measurable objectives;

- Implementation or action plans for each SHSP emphasis area with action steps, countermeasures, assigned roles and responsibilities, etc.;

- Ongoing data collection and analysis; and

- Mechanisms for tracking SHSP implementation, and monitoring progress toward reaching goals and objectives.

Chances are most of these practices already are in place, providing a strong base for conducting an SHSP evaluation. The Champion’s Guidebook and IPM provide more detail on SHSP development and implementation. The steps and recommendations in these guides help create an SHSP ready for evaluation.

The EPM supports a comprehensive, high-level evaluation of the SHSP. This can occur at any point in time, but should take into consideration Federal requirements for regularly recurring evaluation, as well as the State’s needs and circumstances. Some likely times are:

- Prior to or as part of an SHSP update;

- After active SHSP implementation; or

- When an SHSP leader or other official wants to know whether the SHSP is making a difference in transportation safety.

States should always be thinking about how to gauge success and what indicators will be used to determine progress. Typically, these indicators fall into two categories: process evaluation and performance evaluation.

- Process evaluation addresses the SHSP procedural, administrative, and managerial aspects and assesses progress in these areas.

- Performance evaluation addresses the outputs and outcomes resulting from SHSP implementation. It assesses the progress of SHSP implementation and the degree to which it is meeting goals and objectives.

Keeping these indicators in mind early in SHSP development and implementation will ensure important questions about the SHSP can be answered at any time.

Purpose of Evaluation Planning

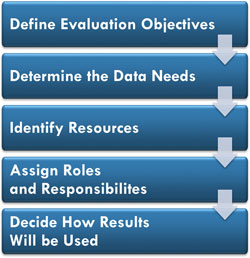

SHSP Evaluation Planning Process

Source: Cambridge Systematics, Inc.

The purpose of evaluation planning is to place managers and stakeholders in the best position to conduct the evaluation. It provides a formalized process and direction for evaluation activities, and helps answer the following questions:

- Objectives: What SHSP issues or questions need to be addressed?

- Data: What data are needed to address the objectives?

- Resources: What resources are available?

- Roles and Responsibilities: Who is responsible? Who is involved?

- Results: How and when will the results be reported and used?

Methods

This section provides more detail on planning methods.

Identify Evaluation Objectives

Program managers should determine which SHSP process and performance areas to evaluate and develop a set of objectives to focus the evaluation effort and identify the resources needed to conduct the evaluation. Once objectives are drafted and resources are identified, they should be circulated to decision-makers with authority over the program to enlist their support for the evaluation objectives and the resources needed, such as staff and funding support.

SHSP program managers will most likely develop three levels of evaluation objectives that address both process and performance issues.

Outputs: The extent to which SHSP strategies and actions are implemented.

Outcomes: The degree to which SHSP strategies and activities contribute to reducing fatalities and serious injuries, improving road user safety attitudes and behaviors, etc.

- Level 1: Process. Process-level evaluation objectives relate to the overall SHSP process, such as the organizational structure by which the program is developed, managed, implemented, and evaluated. These objectives typically focus on elements related to leadership and management structures, collaboration, communication, etc.

- Level 2: Outputs. Output-level evaluation objectives relate to how the SHSP is being implemented and how well the actual implementation matches up to the plan.

Neither process- nor output-level evaluations require elaborate data collection efforts or a research design, but they do require an understanding of what should have happened in terms of leadership and program implementation. They also require a systematic approach for tracking program/strategy implementation.

- Level 3: Outcomes. Outcome-level evaluation objectives focus on the impact the SHSP is having on transportation safety with respect to fatalities, injuries, and crashes. Changes in awareness, attitudes, and behaviors also can be addressed through outcome-level evaluation.

All three evaluation levels require program managers and leaders to develop evaluation objectives they believe will provide the most effective feedback regarding the progress of the SHSP. While an evaluation specialist can provide suggestions, the persons responsible for program effectiveness must ultimately decide what measures best indicate the overall success of the SHSP.

Table 1 illustrates evaluation objectives for the three levels. In this example, SHSP leadership determined that assessing SHSP representation was a priority and established objectives to assess partner participation (a Level 1 objective). Impaired driving, occupant protection, and head-on crashes were emphasis areas, so objectives were established to measure outputs and outcomes in these areas (Level 2 and Level 3 objectives). To answer the Level 2 and Level 3 evaluation objectives, the data must be available and tracked to measure progress.

Table 1. Evaluation Objectives

| Level 1: SHSP Process | Level 2: SHSP Outputs | Level 3: SHSP Outcomes |
|---|---|---|
| | | |

Recommended Actions

- Determine the SHSP process and performance areas to evaluate.

- Identify the resources needed to conduct the evaluation.

- Develop evaluation objectives for assessing the SHSP process.

- Develop evaluation objectives for assessing SHSP performance (outputs and outcomes).

- Circulate the objectives and resource requirements among SHSP decision-makers to obtain their support.

Review Methods for Data Collection and Management

Crash Data Quality Characteristics

- Timeliness.

- Accuracy.

- Completeness.

- Consistency/Uniformity.

- Integration.

- Accessibility.

For more information refer to the FHWA Crash Data Improvement Program Guide.

Quality data are necessary for conducting any evaluation. Fortunately, data quality and timeliness are improving in all States due to various programs that support data improvements, such as FHWA’s Crash Data Improvement Program (CDIP) and NHTSA’s traffic records assessment. If sufficient data are not available, program managers may need to revise the evaluation objectives or implement a data collection effort. Reviewing data collection and management methods will reveal whether:

- Data are available and of sufficient quality to track and assess progress and answer the evaluation objectives;

- Resources are available to collect data currently not available; and

- Objectives for which no evaluation data are available need to be reconsidered or modified.
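The three review questions above can be read as a simple decision rule applied to each evaluation objective. The following is a minimal sketch of that reading, not a prescribed method; the class name, field names, and example objectives are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class EvaluationObjective:
    """Hypothetical record for one evaluation objective (illustrative only)."""
    name: str
    level: str                         # "process", "output", or "outcome"
    data_available: bool               # are data of sufficient quality available now?
    can_fund_collection: bool = False  # are resources available to collect them?


def review_objective(obj: EvaluationObjective) -> str:
    """Return the planning decision suggested by the three review questions."""
    if obj.data_available:
        return "evaluate with existing data"
    if obj.can_fund_collection:
        return "plan new data collection"
    return "reconsider or modify objective"


# Example objectives are assumptions, not taken from any State's SHSP.
objectives = [
    EvaluationObjective("Partner participation in SHSP meetings", "process", True),
    EvaluationObjective("Observational belt-use surveys completed", "output", False, True),
    EvaluationObjective("Change in teen attitudes on impaired driving", "outcome", False),
]

decisions = {obj.name: review_objective(obj) for obj in objectives}
for name, decision in decisions.items():
    print(f"{name}: {decision}")
```

Running the review for every objective before the evaluation begins makes data gaps visible early, while there is still time to fund data collection or adjust the objective.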

Most States have some type of tracking mechanism in place. Tracking the following elements supports process and performance evaluations:

- The implementation status of SHSP strategies and related action steps;

- Outputs from implementing the SHSP strategies;

- Number and rates of fatalities and serious injuries; and

- Changes in road user attitudes and behaviors.
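As an illustration of what a tracking mechanism might capture, the sketch below summarizes implementation status by lead agency and computes a fatality rate. It is a hypothetical example and does not represent any State's tracking system; the record layout, agency names, and figures are assumptions. The rate is expressed per 100 million vehicle miles traveled (VMT), a common convention for fatality rates.

```python
from collections import Counter, defaultdict

# Hypothetical action records; agencies and statuses are illustrative only.
actions = [
    {"id": "A-1", "lead_agency": "DOT", "status": "completed"},
    {"id": "A-2", "lead_agency": "DOT", "status": "in progress"},
    {"id": "A-3", "lead_agency": "Highway Patrol", "status": "not started"},
    {"id": "A-4", "lead_agency": "Highway Patrol", "status": "completed"},
]


def status_by_agency(records):
    """Count actions in each implementation status, grouped by lead agency."""
    summary = defaultdict(Counter)
    for record in records:
        summary[record["lead_agency"]][record["status"]] += 1
    return summary


def rate_per_100m_vmt(count, vmt):
    """Return a crash outcome rate per 100 million vehicle miles traveled."""
    if vmt <= 0:
        raise ValueError("vmt must be positive")
    return count * 100_000_000 / vmt


summary = status_by_agency(actions)
for agency, counts in summary.items():
    print(agency, dict(counts))

# Hypothetical figures: 500 fatalities over 50 billion VMT.
print(rate_per_100m_vmt(500, 50_000_000_000))
```

Tracking both the count and the rate matters because a change in travel volume can move the number of fatalities even when the underlying risk per mile is unchanged.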

Recommended Actions

- Review the existing data collection and management methods to determine whether:

  - Quality data are available to track progress and answer the evaluation questions;

  - Resources are available to collect data currently not available; and

  - Objectives for which no evaluation data are available need to be reconsidered or modified.

- Review the tracking mechanism(s) in place to determine:

  - Where the information can be obtained and what is captured (e.g., implementation status of SHSP strategies and actions, number and rates of fatalities and serious injuries, etc.); and

  - Whether a mechanism can be put in place, or the information gathered using an alternative method, if information needed for the evaluation is not formally tracked.

Determine How to Measure Progress


Sufficient data are not always available to measure everything, but establishing specific evaluation objectives will help identify which data are a priority. Lack of data should not preclude the attempt to evaluate the SHSP. If quantitative data are not yet available, a qualitative evaluation can be performed. The important point is to focus on what can be evaluated.

Measuring success becomes more difficult as the measurement moves from outputs (e.g., what activities occurred, such as the number of traffic citations) toward outcomes. However, outcomes, such as a decrease in traffic crashes or crash-related injuries and deaths, hold greater importance. The following list suggests performance measures that could be used, in descending order of importance.

- Primary Outcome Measures. Reductions in the number and rate of fatal and serious injury crashes statewide and by Emphasis Area.

- Secondary Outcome Measures (also called Proxy Measures). Changes in observed behavior, such as safety belt and helmet use, speeding, red light running, jaywalking, changes in average speeds due to traffic calming countermeasures, and other observed behavior changes.

- Self-Report Measures (what people say). Responses to questions such as: Have you ever driven after having too much to drink? How often do you wear your safety belt?

- Attitudinal Data Measures (what people believe). Support for legislative initiatives; knowledge of safety belt laws; teen attitudes about drinking and driving; and attitudes toward roundabouts, rumble strips, and other infrastructure safety improvements.

- Awareness Data Measures (what messages people have heard). Awareness of high-visibility enforcement, perceived risk of getting a traffic ticket.

- Activity Levels (program implementation). Miles of rumble strips, pavement markings, signage, and other infrastructure improvements; citations issued by the police, special police patrols, and checkpoints; presentations; training programs; media coverage; legislation; etc. (National Highway Traffic Safety Administration (2008). The Art of Evaluation, page 32.)

If the highest levels of measurement are not available, other more obtainable measures might be considered.
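The ordering of measure types lends itself to a simple ranking. The sketch below is one hypothetical way to encode the six categories and list the measures that currently have data, strongest first; the enum, function name, and example measures are assumptions made for illustration.

```python
from enum import IntEnum


class MeasureLevel(IntEnum):
    """Measure categories; a lower value means higher importance."""
    PRIMARY_OUTCOME = 1
    SECONDARY_OUTCOME = 2
    SELF_REPORT = 3
    ATTITUDINAL = 4
    AWARENESS = 5
    ACTIVITY = 6


def rank_available(measures):
    """Return measures that have data, sorted from most to least important."""
    available = [m for m in measures if m["has_data"]]
    return sorted(available, key=lambda m: m["level"])


# Example measures are hypothetical.
measures = [
    {"name": "Citations issued", "level": MeasureLevel.ACTIVITY, "has_data": True},
    {"name": "Serious injury crashes", "level": MeasureLevel.PRIMARY_OUTCOME, "has_data": False},
    {"name": "Observed safety belt use", "level": MeasureLevel.SECONDARY_OUTCOME, "has_data": True},
]

ranked = rank_available(measures)
for measure in ranked:
    print(measure["level"].name, "-", measure["name"])
```

In this example the primary outcome measure has no data yet, so the observed-behavior measure becomes the strongest evidence available, with activity levels as supporting information.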

Recommended Actions

- Review the existing SHSP performance measures and categorize them as (in descending order of importance):

  - Primary outcome;

  - Secondary outcome;

  - Self reporting;

  - Attitudinal;

  - Awareness; or

  - Activity level.

- Include the higher-level performance measures (e.g., primary outcomes) in the evaluation if data are available to answer the evaluation objectives, and consider other, more attainable measures if they are not.

Identify and Secure Resources

Evaluation often requires resources, including funding, staff time, and evaluation expertise. It is important to identify and secure these resources so they are available for the program evaluation and throughout the life of the SHSP. Evaluation does not require high-level scientific or technical expertise; however, it does require people capable of objectively collecting, tracking, and analyzing data and other information to produce and interpret results. SHSP leadership should dedicate staff and financial resources to support this function.

Evaluation of other safety plans or programs, such as the HSIP, Commercial Vehicle Safety Plan (CVSP), or the Highway Safety Plan (HSP), should be underway and could provide additional resources or expertise for the SHSP evaluation effort. Universities (e.g., professors and graduate students who may be looking for evaluation projects) provide another good source of evaluation expertise.

Establish a timeframe for evaluation or a target date for completion. While most of the information and data needed for the evaluation already are collected and accessible, it will take time to gather and analyze all of the pieces and synthesize them into meaningful results.

Recommended Actions

- Identify available resources to support SHSP evaluation.

- Identify individuals or agencies with the skills to analyze data and other information and provide evaluation support.

- Explore the availability of universities, professors/graduate students, and others if extra help or expertise is needed.

- Collect information on current evaluation efforts among the partners, e.g., CVSP, HSP, etc.

- Determine a timeframe for conducting SHSP evaluation.

Assign Evaluation Roles and Responsibilities

Evaluation typically includes a management function to drive the effort and an analysis function for collecting, analyzing, and reporting data and results. These functions could be performed by a single person or by several people. Responsibilities should be clearly defined in either case and include a description of the data to be collected, the level and type of analyses to be conducted, and a reporting schedule.

Recommended Actions

- Determine the agency responsible for coordinating the overall program evaluation effort.

- Identify the individual(s) who will manage the evaluation effort, collect and analyze data, and report the evaluation results.

Report and Use Evaluation Results

Define the reporting process and describe how the results will be used to improve SHSP process and performance. The reporting process should identify the parties responsible for generating and distributing the results, the audiences who will receive the information, when they will receive it, and how it will be presented (e.g., spreadsheets, formal written documents, verbal updates, e-mail notices, etc.). Assign a lead person responsible for generating and distributing evaluation results.

States should document how they intend to use the results, e.g., to identify successes, gaps, challenges, and opportunities. At a minimum, use the results to review and, where necessary, improve SHSP process and performance. Other uses of evaluation results include informing elected officials, engaging safety stakeholders, and reaching out to a broader audience, e.g., the public. For more information on how to use evaluation results, see Chapter 4.

Recommended Actions

Source: Federal Highway Administration.

- Identify the parties responsible for generating the evaluation results.

- Assign a lead person to pull together the results from the various parties and distribute them to the appropriate committees, agencies, etc.

- Determine who will receive the results of the evaluation, e.g., agency executives, Steering Committee members, all stakeholders, etc.

- Decide how the results will be formatted, e.g., formal reports, spreadsheets, PowerPoint presentations, etc.

- Identify how the evaluation results will be used.

Document Evaluation Approach

States should document the evaluation approach. This need not be a long or complicated plan. It could be as simple as a one-page description of the evaluation objectives, data needs, resources, roles and responsibilities, and methods for applying the results.

Documenting these elements of the evaluation will formalize the process, keep the evaluation focused on the original objectives, record details of the evaluation, such as the specific tasks and roles and responsibilities, establish a level of accountability, and serve as a reference for new leadership and staff.
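For States that keep planning documents electronically, the one-page description could be held as a small structured record. The sketch below is only one possible layout, under the assumption that the five elements named above are the fields; the class name, field names, and sample entries are hypothetical.

```python
from dataclasses import dataclass, fields
from typing import Dict, List


@dataclass
class EvaluationPlan:
    """Hypothetical one-page evaluation plan with the five documented elements."""
    objectives: List[str]
    data_needs: List[str]
    resources: List[str]
    roles_and_responsibilities: Dict[str, str]  # role -> responsible party
    application_methods: List[str]

    def missing_elements(self) -> List[str]:
        """Return the names of elements that have not been filled in yet."""
        return [fld.name for fld in fields(self) if not getattr(self, fld.name)]

    def to_text(self) -> str:
        """Render the plan as a short plain-text outline."""
        lines = []
        for fld in fields(self):
            lines.append(fld.name.replace("_", " ").title())
            value = getattr(self, fld.name)
            if isinstance(value, dict):
                items = [f"{role}: {party}" for role, party in value.items()]
            else:
                items = value
            lines.extend(f"  - {item}" for item in items)
        return "\n".join(lines)


# Sample entries are illustrative assumptions.
plan = EvaluationPlan(
    objectives=["Assess partner participation in SHSP meetings"],
    data_needs=["Meeting attendance records"],
    resources=["Staff time from the safety office"],
    roles_and_responsibilities={"Evaluation lead": "State DOT safety office"},
    application_methods=[],
)

print(plan.to_text())
print("Still to document:", plan.missing_elements())
```

Listing the unfilled elements gives new leadership and staff a quick view of what remains to be decided before the evaluation begins.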

Recommended Actions

- Document the evaluation elements (objectives, data needs, resources, roles and responsibilities, application methods, etc.) to formalize the process.

Evaluation Planning Checklist

The following checklist is designed to support evaluation planning. If most or all of these activities are completed, the State is prepared for SHSP evaluation.

- Identify evaluation objectives.

- Identify the data needed to address the objectives and perform the evaluation.

- Determine if existing data collection strategies are sufficient for evaluation.

- Identify resources needed to collect data or adjust evaluation objectives if available data are insufficient for evaluation purposes.

- Assign responsibility for generating and distributing evaluation results.

- Document a reporting process to update agencies, partners, and decision-makers on SHSP evaluation results.

- Determine how evaluation results will be applied.

- Document the approach or plan for the evaluation.