Chapter 3 – Performance Evaluation: Outputs and Outcomes

Introduction

Over the past 15 years, State Departments of Transportation (DOTs), State Highway Safety Offices (SHSOs), Metropolitan Planning Organizations (MPOs), and other government agencies have increasingly applied performance management principles to plan, prioritize, track, and improve the effectiveness of their programs. For the Strategic Highway Safety Plan (SHSP), performance management relies on performance measures to assess the outputs and outcomes of SHSP implementation.

Purpose

The purpose of performance evaluation is to determine how effective the SHSP has been in meeting its goals and objectives. Performance evaluation examines two things: the actual degree of SHSP implementation compared with what was planned (output evaluation), and the degree to which the implemented strategies have contributed to (or are correlated with) measurable change (outcome evaluation).

Output Evaluation

Output evaluation determines the extent to which SHSP strategies and actions are implemented and outputs are produced; in other words, it measures progress and productivity. Identifying the degree to which the SHSP has been implemented is not only a necessary step in determining SHSP outputs, but also helps determine whether implementation is affecting SHSP outcomes.

It is common for an SHSP to generate emphasis area action plans that identify the strategies and actions to be accomplished, the party responsible for completing each action, and expected completion dates. States that regularly track SHSP implementation progress are well positioned to perform output evaluation. The expected or planned timeline for completing SHSP actions and the planned level of activity together form a baseline. Performance evaluation based on outputs compares the actual degree of SHSP implementation to this baseline.
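The baseline comparison described above can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed method: the `ActionOutput` record, the `percent_of_plan` and `output_report` helpers, and all of the counts are hypothetical, and the sketch assumes each action's planned output as of the review date is known and positive.

```python
from dataclasses import dataclass


@dataclass
class ActionOutput:
    """One SHSP action with its planned (baseline) and actual output (hypothetical record)."""
    name: str
    planned: float  # planned level of activity as of the review date (baseline)
    actual: float   # output actually produced as of the review date


def percent_of_plan(action: ActionOutput) -> float:
    """Actual output as a percentage of the planned baseline."""
    if action.planned <= 0:
        raise ValueError(f"{action.name}: planned output must be positive")
    return 100.0 * action.actual / action.planned


def output_report(actions: list[ActionOutput]) -> dict[str, float]:
    """Map each action name to its percent of planned output, rounded to 0.1."""
    return {a.name: round(percent_of_plan(a), 1) for a in actions}


if __name__ == "__main__":
    # Hypothetical emphasis area action plan; all values are illustrative.
    plan = [
        ActionOutput("High-visibility enforcement campaigns", planned=12, actual=9),
        ActionOutput("Intersections with improved pavement markings", planned=40, actual=44),
        ActionOutput("Centerline miles with rumble strips", planned=250, actual=180),
    ]
    for name, pct in output_report(plan).items():
        print(f"{name}: {pct}% of plan")
```

Values below 100 percent flag actions that are behind the planned baseline; values above 100 percent indicate output ahead of plan.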

Examples of output performance measures include:

- The number of high-visibility enforcement campaigns;

- The number of public service announcements aired (earned media);

- The number of intersections with improved pavement markings; and

- The number of centerline miles with cable median barrier, rumble strips, etc.

Output Evaluation Methods

Performance Measures

Legislation requires the U.S. Department of Transportation to establish performance measures for the Federal-aid highway program. In the area of safety, these measures are the number and rate of fatalities and serious injuries (23 U.S.C. 150). Because States will be required to set targets for and report on these measures, they should also be considered when developing SHSP performance measures.

NHTSA and the Governors Highway Safety Association (GHSA) have also developed a set of core performance measures, including:

- Number of traffic fatalities (three-year or five-year moving averages);

- Number of serious injuries in traffic crashes;

- Number of speeding-related fatalities; and

- Number of pedestrian fatalities.
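Several of these measures are reported as three-year or five-year moving averages, which smooth year-to-year fluctuation in fatality counts. A minimal sketch of a trailing moving average follows; the `moving_average` helper and the annual counts are hypothetical, and each average is labeled with the last year in its window, one common convention assumed here.

```python
def moving_average(years: list[int], counts: list[float], window: int) -> dict[int, float]:
    """Trailing moving average of annual counts, keyed by the window's last year."""
    if len(years) != len(counts):
        raise ValueError("years and counts must have the same length")
    if not 1 <= window <= len(counts):
        raise ValueError("window must be between 1 and the number of years")
    return {
        years[i]: sum(counts[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(counts))
    }


if __name__ == "__main__":
    # Hypothetical statewide traffic fatality counts (illustrative only).
    years = [2016, 2017, 2018, 2019, 2020]
    fatalities = [1200, 1140, 1170, 1110, 1050]
    print(moving_average(years, fatalities, 3))  # {2018: 1170.0, 2019: 1140.0, 2020: 1110.0}
    print(moving_average(years, fatalities, 5))  # {2020: 1134.0}
```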

For a list of all NHTSA/GHSA performance measures, see: Traffic Safety Performance Measures for States and Federal Agencies.

States can also consult A Primer on Safety Performance Measures for the Transportation Planning Process, developed by FHWA, for guidance on creating safety performance measures.

Output evaluation helps determine the status of SHSP strategies and actions, that is, the extent to which they have been implemented (e.g., not started, partially implemented, or fully implemented). The idea is to track performance measures that relate to progress made in implementing the SHSP. For example, if a State has determined that lane departure crashes account for a disproportionately high share of fatal crashes, it may decide to implement a variety of countermeasures to keep vehicles in their lanes. One of these might be the installation of shoulder and centerline rumble strips, and the corresponding performance measure is the number of miles of rumble strips installed. Some States also may decide to track spending on rumble strip installation as a separate performance measure. In both cases, it is important to collect and report the data.

Many infrastructure-related countermeasures require multiple years for completion. In those cases, progress milestones can be developed and tracked, such as identifying promising sites for installing the countermeasure. These milestones can, at a minimum, show progress towards a performance measure.
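Milestone tracking and the status categories described above can be combined in a short sketch. The `Status` values mirror the categories named in the text; the `milestone_progress` helper, the milestone names, and the rule that any completed milestone counts as "partially implemented" are hypothetical choices made for illustration.

```python
from enum import Enum


class Status(Enum):
    """Implementation status categories named in the text."""
    NOT_STARTED = "not started"
    PARTIALLY_IMPLEMENTED = "partially implemented"
    FULLY_IMPLEMENTED = "fully implemented"


def milestone_progress(milestones: dict[str, bool]) -> tuple[Status, float]:
    """Return (status, percent of milestones completed) for one countermeasure."""
    if not milestones:
        raise ValueError("at least one milestone is required")
    done = sum(milestones.values())  # True counts as 1, False as 0
    percent = 100.0 * done / len(milestones)
    if done == 0:
        return Status.NOT_STARTED, percent
    if done == len(milestones):
        return Status.FULLY_IMPLEMENTED, percent
    return Status.PARTIALLY_IMPLEMENTED, percent


if __name__ == "__main__":
    # Hypothetical milestones for a multiyear rumble strip program.
    rumble_strips = {
        "Identify promising sites": True,
        "Complete design": True,
        "Award construction contract": False,
        "Install rumble strips": False,
    }
    status, pct = milestone_progress(rumble_strips)
    print(f"{status.value}: {pct:.0f}% of milestones complete")  # partially implemented: 50% of milestones complete
```

Weighting every milestone equally is the simplest option; an agency could instead weight milestones by cost or duration.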

Outcome Evaluation

Outcome evaluation measures the degree to which SHSP goals and objectives are being met and whether there is a reduction in fatalities and serious injuries, improvement in road user safety attitudes and behaviors, etc. In other words, it can help answer the question, “Are we doing the right things?”

Typical outcome performance measures relate to the number and rate of crashes, fatalities, and serious injuries; observed behavior; emergency response times; public perceptions of safety for the various transportation modes; etc. Safety issues vary across the country; therefore, no single set of safety performance measures is applicable to all States (FHWA, 2010, A Primer on Safety Performance Measures and the Transportation Planning Process).

Outcome evaluation is challenging because the link between SHSP implementation and crash reduction is indirect. Causality is difficult to establish because the controlled conditions of a scientific experiment generally cannot be created in the transportation safety field. However, while it is not possible to conclusively demonstrate that action A “caused” condition B, correlations between conditions A and B can be identified and used in outcome evaluation.
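Because outcome evaluation relies on correlation rather than causation, a Pearson correlation coefficient is one simple way to quantify how closely two annual series move together. The sketch below computes it from first principles; the `pearson_r` name and both series (cumulative rumble strip miles and lane departure fatalities) are hypothetical, and a strong coefficient still would not show that one series caused the other.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length (at least 2 points)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical annual values (illustrative only).
    rumble_strip_miles = [0, 150, 300, 450, 600]  # cumulative miles installed
    lane_departure_fatalities = [420, 400, 385, 360, 350]
    r = pearson_r(rumble_strip_miles, lane_departure_fatalities)
    # A value near -1 means the series move in opposite directions;
    # it does not establish that the rumble strips caused the decline.
    print(f"r = {r:.2f}")
```

On Python 3.10 and later, `statistics.correlation` from the standard library computes the same coefficient.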

Even though establishing causality is difficult, statistical analysis to measure program outcomes is feasible and can provide important information for identifying which efforts are likely contributing to positive safety outcomes. Beyond these measurable outcomes, the SHSP process has clearly produced multiagency, multidisciplinary, multimodal programs that did not exist before SHSPs were legislatively required. Interactive, resource-sharing, multidisciplinary programs are complex and difficult to implement, manage, and sustain, but recent experience in the U.S. shows they are possible and can be very successful.

Source: Cambridge Systematics, Inc.

Performance evaluation based on outcomes determines whether the strategies and actions contribute to (or are correlated with) measurable change. The ultimate evaluation question is whether the SHSP has achieved the intended results in terms of reducing fatalities and serious injuries on the roadways. Outcomes also may measure improvements in road user safety attitudes, awareness, and behaviors. If countermeasure implementation costs are available, a benefit/cost analysis can be performed to compare implementation costs to the economic value of the resulting reductions in fatalities and injuries. Performance evaluation based on outcomes consists of reviewing performance measures and comparing them to baseline data. In this case, baseline data on the selected performance measures are gathered prior to SHSP implementation (e.g., fatality and injury numbers and/or rates, awareness measures, observational survey results, etc.).

Performance measures for outcome evaluation typically include the following:

- Overall fatalities and serious injuries;

- Fatalities and serious injuries by emphasis area;

- Observed behavior, e.g., annual safety belt observations; and

- Knowledge and awareness.

SHSPs encourage multidisciplinary approaches resulting in multiple strategies, which often makes it difficult to separate the effect of a single strategy.

Nevertheless, the impact of a combination of strategies can be assessed at the program level. If spending is also tracked, benefit/cost ratios can be calculated to improve future resource allocation, focusing limited resources on the programs with the greatest potential impact.

Outcome Evaluation Methods

Outcome evaluation uses trend analysis, benefit/cost analysis, survey data, and other data to shed light on performance outcomes, e.g., fatalities, serious injuries, etc. These measures reveal the extent to which actions are affecting safety outcomes. Performance evaluation compares outcome data on SHSP objectives to baseline data to determine the degree to which objectives are met.

Trend Analysis

As noted previously, trend analysis can be used to set objectives, but it also can be used to track progress over time. Most States measure and track safety by monitoring multiple years of fatality and serious injury data.

To measure safety outcomes, most States focus on fatal and serious injury crashes. Agencies typically calculate the number and rate of fatalities and serious injuries as a general statistical measure. Some States also calculate these statistics by emphasis area to identify areas where progress is being achieved, as well as areas where the numbers do not appear to be moving or are moving in the wrong direction. Performance evaluation in this case consists of comparing crash outcomes with previously set objectives at both the overall and emphasis area levels.
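The rate calculation and the comparison with objectives can be sketched as follows. The sketch assumes rates are expressed per 100 million vehicle miles traveled (VMT), the normalization used for the Federal safety performance measures, and that lower values are better for every measure. The helper names, emphasis areas, and numbers are hypothetical.

```python
def rate_per_100m_vmt(count: float, vmt: float) -> float:
    """Fatalities or serious injuries per 100 million vehicle miles traveled."""
    if vmt <= 0:
        raise ValueError("VMT must be positive")
    return count * 100_000_000 / vmt


def percent_change(baseline: float, current: float) -> float:
    """Percent change from baseline; negative values indicate a reduction."""
    if baseline == 0:
        raise ValueError("baseline must be nonzero")
    return 100.0 * (current - baseline) / baseline


def compare_to_objectives(
    actual: dict[str, float], objectives: dict[str, float]
) -> dict[str, str]:
    """Label each emphasis area 'met' or 'not met' (lower values are better)."""
    return {
        area: "met" if actual[area] <= target else "not met"
        for area, target in objectives.items()
    }


if __name__ == "__main__":
    # Hypothetical statewide figures (illustrative only).
    print(round(rate_per_100m_vmt(1_100, 100_000_000_000), 2))  # 1.1
    print(round(percent_change(520, 480), 1))  # -7.7
    objectives = {"Lane departure": 500, "Intersections": 200, "Pedestrians": 150}
    actual = {"Lane departure": 480, "Intersections": 215, "Pedestrians": 150}
    print(compare_to_objectives(actual, objectives))
```

Computing the same comparison for every emphasis area makes it easy to spot areas where numbers are flat or moving in the wrong direction.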

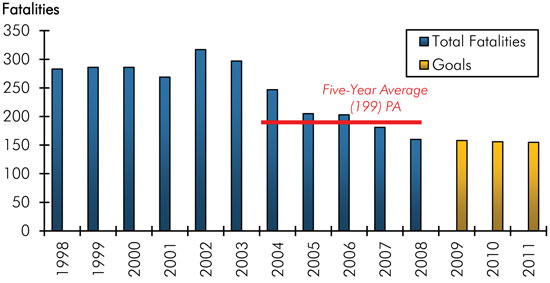

Figure 4 shows the progress in one of Pennsylvania’s emphasis areas. When Pennsylvania developed its SHSP in 2006, one of the goals in the infrastructure improvements emphasis area was to reduce head-on crashes, the most severe type of crash. On average, head-on crashes accounted for 17 percent of total fatalities but only 4 percent of all reportable crashes in Pennsylvania. The State’s objective was to reduce head-on fatalities to 200 in 2008. The fatality data show Pennsylvania met the objective; in fact, the State reached it early.

Figure 4. Head-On Fatalities: Historical Fatality Data and Future Goals

Source: Cambridge Systematics, Inc. based on data from Pennsylvania’s Strategic Highway Safety Plan.
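Checking whether and when an objective such as Pennsylvania’s was reached amounts to a simple scan of the annual series. In this sketch, `first_year_met` is a hypothetical helper and the head-on fatality counts are invented for illustration; they are not Pennsylvania’s actual data. Only the objective of 200 fatalities in 2008 comes from the text.

```python
from typing import Optional


def first_year_met(series: dict[int, float], target: float) -> Optional[int]:
    """Earliest year in which the annual value was at or below the target."""
    for year in sorted(series):
        if series[year] <= target:
            return year
    return None  # objective not yet reached


if __name__ == "__main__":
    # Invented head-on fatality counts, NOT Pennsylvania's actual figures.
    head_on = {2005: 240, 2006: 228, 2007: 198, 2008: 191}
    objective, objective_year = 200, 2008
    met = first_year_met(head_on, objective)
    if met is None:
        print("Objective not yet met")
    else:
        years_early = objective_year - met
        print(f"Objective met in {met}, {years_early} year(s) ahead of schedule")  # Objective met in 2007, 1 year(s) ahead of schedule
```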

Crash trends can provide an indication of overall safety performance, but they may not provide enough information to attribute crash reductions to a specific SHSP strategy, because other programs or external factors, such as changes in population or a decrease in exposure, may also be contributing to the reduction. For example, implementing the recommendations from a corridor safety study may result not only in engineering improvements, such as rumble strips, but also in public education on the importance of buckling up and in high-visibility speed enforcement. In this case, tracking performance based on rumble strip installation alone most likely would overestimate the amount of change associated with that countermeasure, because it would not account for increased safety belt use and speed reductions.

This mingling of impacts from multiple strategies and countermeasures sometimes has the unintended consequence of keeping ineffective countermeasures in place. For example, consider an effective and an ineffective countermeasure implemented together. If both were equally funded, crashes could still decline, yet because it is difficult to attribute the reduction to the correct countermeasure, it may appear that both were effective. For this reason, it is important to use countermeasures with proven effectiveness and to evaluate countermeasures with less evidence of effectiveness.
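A small arithmetic sketch makes the masking effect concrete. It assumes each countermeasure’s effect can be expressed as a crash modification factor (CMF, the ratio of expected crashes with the countermeasure to expected crashes without it) and that CMFs combine multiplicatively, a common simplifying assumption. The CMF values, crash counts, and the `program_reduction` helper are hypothetical. The program-level reduction is the same whether or not the ineffective countermeasure (CMF of 1.0) is included, so before/after totals alone cannot reveal which one did the work.

```python
from math import prod


def program_reduction(baseline_crashes: float, cmfs: list[float]) -> float:
    """Percent crash reduction for a package of countermeasures.

    Assumes the CMFs are independent and combine multiplicatively.
    """
    if baseline_crashes <= 0:
        raise ValueError("baseline crashes must be positive")
    expected_after = baseline_crashes * prod(cmfs)
    return 100.0 * (baseline_crashes - expected_after) / baseline_crashes


if __name__ == "__main__":
    baseline = 100  # hypothetical annual crashes before implementation
    effective, ineffective = 0.80, 1.00  # hypothetical CMFs
    both = program_reduction(baseline, [effective, ineffective])
    effective_only = program_reduction(baseline, [effective])
    # Both packages show the same reduction, so the program-level result
    # gives no signal that the second countermeasure contributed nothing.
    print(f"{both:.1f}% vs {effective_only:.1f}%")  # 20.0% vs 20.0%
```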

Attitude and Behavior Analysis

Many SHSPs contain emphasis areas, strategies, and actions designed to change attitudes and improve safety behavior, such as high-visibility enforcement programs and public- and school-based education programs. Typically, these programs aim to increase occupant protection use or to reduce impaired, aggressive, or distracted driving. Attitude and behavior change can be measured by random observational and telephone surveys; one commonly used observational survey is the annual statewide safety belt use survey, conducted by States under NHTSA’s uniform survey criteria. Survey data can be used not only to measure performance, but also to design programs. For example, if survey data show the public strongly supports tougher impaired driving laws, the results could be used to educate and inform elected officials.
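Survey results from two years are often compared with a two-proportion test to judge whether an observed change is larger than sampling noise. The sketch below is a simplified version that assumes independent simple random samples; actual statewide observational surveys use stratified, clustered designs with their own variance estimation, so treat this only as an illustration. The `two_proportion_z` function and the sample counts are hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value).

    x1 of n1 observed occupants were belted in the first survey year,
    x2 of n2 in the second. Assumes independent simple random samples.
    """
    if n1 <= 0 or n2 <= 0:
        raise ValueError("sample sizes must be positive")
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    if pooled in (0.0, 1.0):
        raise ValueError("test is undefined when all or no observations are belted")
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical belt-use observations in two survey years.
    z, p = two_proportion_z(800, 1000, 850, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```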

Benefit/Cost Analysis

Evaluation in Action

Pennsylvania Uses Benefit/Cost Analysis to Determine SHSP Priorities

Pennsylvania compiled a list of countermeasures in which the State had invested significant resources, such as safety belt programs. A review of the data revealed that the initial infusion of resources increased the belt use rate from 76 percent in 2002 to 86 percent in 2010; however, continued spending had not pushed the rate much above 86 percent. This prompted the State to review its current countermeasures and determine whether other promising strategies should receive support from the State’s limited resources. The review resulted in a realignment of funding among the SHSP emphasis areas and a requirement that grantees justify requests in terms of effectiveness in reducing traffic-related fatalities and serious injuries.

Conducting a program-level benefit/cost analysis of SHSP-related efforts is difficult because of the complexity of SHSPs and the difficulty of measuring the costs and benefits of many behavioral programs. However, if benefit/cost analysis is conducted on a large number of related projects, such as a statewide program to improve or restore the superelevation of many horizontal curves, it could provide some level of overall SHSP assessment (e.g., whether the benefits of the program have outweighed the costs) and some indication of whether the program has improved safety. Typically, program benefit/cost analysis uses three years of data before and three years of data after implementation of the improvements.
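A program-level benefit/cost ratio of the kind described above can be sketched as follows. The assumptions are all hypothetical: crash totals by severity for three years before and three years after implementation, a monetized cost per crash for each severity level (placeholders, not published crash cost values), and a program cost annualized over its service life with a capital recovery factor. A simple before/after comparison like this does not correct for regression to the mean or changes in traffic volume, so more rigorous methods may be warranted.

```python
def annual_crash_change(
    before: dict[str, int], after: dict[str, int], years: int = 3
) -> dict[str, float]:
    """Average annual reduction in crashes by severity (before minus after)."""
    return {sev: (before[sev] - after.get(sev, 0)) / years for sev in before}


def capital_recovery_factor(rate: float, life_years: int) -> float:
    """Converts a present cost into an equal annual cost over its service life."""
    if life_years <= 0:
        raise ValueError("service life must be positive")
    if rate == 0:
        return 1 / life_years
    growth = (1 + rate) ** life_years
    return rate * growth / (growth - 1)


def benefit_cost_ratio(
    before: dict[str, int],
    after: dict[str, int],
    unit_costs: dict[str, float],
    program_cost: float,
    rate: float,
    life_years: int,
) -> float:
    """Annual crash cost savings divided by annualized program cost."""
    annual_benefit = sum(
        change * unit_costs[sev]
        for sev, change in annual_crash_change(before, after).items()
    )
    annual_cost = program_cost * capital_recovery_factor(rate, life_years)
    return annual_benefit / annual_cost


if __name__ == "__main__":
    # Hypothetical three-year crash totals for a statewide curve program,
    # by severity: K = fatal, A = serious injury.
    before = {"K": 12, "A": 45}
    after = {"K": 6, "A": 30}
    unit_costs = {"K": 1_000_000.0, "A": 100_000.0}  # placeholder values
    bc = benefit_cost_ratio(before, after, unit_costs, 8_000_000.0, 0.04, 20)
    print(f"B/C = {bc:.2f}")
```

A ratio above 1.0 would suggest the program’s benefits have outweighed its costs under these assumptions; computing the ratio for several programs allows them to be ranked when directing limited resources.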

The primary use of benefit/cost analysis is to direct resources to program areas with the greatest potential to improve safety. Additional information on benefit/cost evaluation methods at the individual project level can be found in FHWA’s Highway Safety Improvement Program Manual.

Recommended Actions

- Assemble data for assessing output and outcome performance measures.

- Identify missing data that may prevent assessment of performance measures.

- Determine if other performance measures can be used to determine progress.

- Document baseline data.

- Determine the output and outcome measures for each SHSP emphasis area.

- Compare output performance measures with baseline data.

- Compare outcome performance measures with baseline data.

- Compare observation and/or telephone survey results to measure changes in awareness, attitudes, and behaviors.

- Collect and review the data available for benefit/cost analyses.

- Conduct program-level benefit/cost analyses where feasible.

Self-Assessment Questions

The following self-assessment questions are designed to inform performance evaluation. Answering “yes” to a question indicates the State’s SHSP has been effective or successful in this area of performance evaluation. Answering “no” indicates improvements can be made.

- Has the current status of all output and outcome performance measures been gathered and reviewed?

- Are the performance measures clearly related to SHSP goals and objectives?

- Are the numbers and rates of fatalities and serious injuries used as general statistical measures?

- Are the numbers and rates of fatalities and serious injuries tracked and reported by emphasis area and compared to previously set objectives?

- Have fatality and serious injury objectives been met?

- Are observation and/or telephone survey data collected and analyzed to track changes in awareness, attitudes, and behaviors?

- Have awareness, attitude, and behavior objectives been met?

- Are program-level benefit/cost analyses conducted on certain SHSP programs?

- If so, have the benefits of the program(s) outweighed the costs?